BIG-IP LTM offers many load balancing methods to choose from:

- Static – these methods do not take server performance into consideration:
  - Round robin
  - Ratio
- Dynamic – these methods take server performance into consideration:
  - Least connections
  - Fastest
  - Observed
  - Predictive
  - Dynamic ratio

It is important to note that the LB distributes requests only to available servers. Availability is determined by both the administrative state (set by the administrator) and the monitor status, at both the node and the pool member level.
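The availability rule can be pictured as a filter that runs before any load balancing method chooses a member. This is a minimal Python sketch, not BIG-IP code; the `Member` class and its field names are hypothetical and only model the four conditions described above.

```python
from dataclasses import dataclass


@dataclass
class Member:
    # Hypothetical model of a pool member; field names are illustrative only.
    address: str
    port: int
    node_enabled: bool = True       # administrative state of the node (IP)
    member_enabled: bool = True     # administrative state of the pool member (IP:port)
    node_monitor_up: bool = True    # result of the node-level health monitor
    member_monitor_up: bool = True  # result of the member-level health monitor


def is_available(m):
    # Eligible for load balancing only if the administrator has enabled it
    # and the monitors report it up, at both the node and the member level.
    return (m.node_enabled and m.member_enabled
            and m.node_monitor_up and m.member_monitor_up)


def available_members(pool):
    # Every load balancing method chooses only from this filtered list.
    return [m for m in pool if is_available(m)]
```

For example, a member whose node monitor is down is dropped even though the member itself is enabled.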

Round robin – the default and most commonly used LB method. Client requests are passed to each member in turn, so they are spread evenly across the pool.
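A minimal Python sketch of round robin, assuming members are plain strings (the member names are hypothetical); real BIG-IP bookkeeping is more involved.

```python
from itertools import cycle


def round_robin(members):
    # Yield the members in order, wrapping around at the end of the list,
    # so each member receives an equal share of requests.
    return cycle(members)
```

With three members, the fourth request wraps back to the first member.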

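Ratio – requests are distributed according to a weight (ratio) that the administrator assigns to each member. This mode is useful when some servers are more powerful than others: a member with ratio 3 receives three requests for every one sent to a member with ratio 1.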
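Ratio can be sketched as a weighted round robin. This is a simplified Python illustration, assuming integer ratios and hypothetical member names; the exact order in which BIG-IP interleaves members may differ, but the proportions over a full cycle are the point.

```python
from itertools import cycle


def ratio(weights):
    # weights maps member -> administrator-assigned ratio (positive integer).
    # Each member appears in the rotation as many times as its ratio, so over
    # one full cycle a ratio-3 member gets three requests per ratio-1 request.
    schedule = [member for member, weight in weights.items() for _ in range(weight)]
    return cycle(schedule)
```

Over eight requests with ratios 3 and 1, the stronger server receives six and the weaker one two.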

Least connections – the next request goes to the member with the fewest open connections. If several members have the same number of connections, the LB round-robins between them.
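The following Python sketch shows least connections with the round-robin tie-break described above. The class, its connection counter and the `close()` callback are hypothetical bookkeeping for illustration, not how BIG-IP tracks connections internally.

```python
class LeastConnections:
    """Least connections with a round-robin tie-break (illustrative sketch)."""

    def __init__(self, members):
        self.members = list(members)
        # Hypothetical bookkeeping: open connections per member.
        self.connections = {m: 0 for m in self.members}
        # Where the next tie-break scan starts; advancing it rotates the
        # choice among members that share the lowest connection count.
        self.next_start = 0

    def pick(self):
        fewest = min(self.connections.values())
        n = len(self.members)
        for i in range(n):
            idx = (self.next_start + i) % n
            member = self.members[idx]
            if self.connections[member] == fewest:
                self.next_start = (idx + 1) % n
                self.connections[member] += 1  # the new connection is now open
                return member

    def close(self, member):
        # Called when a client connection to `member` ends.
        self.connections[member] -= 1
```

With three idle members the first three picks rotate through all of them; once one member closes a connection, it becomes the next choice.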

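Fastest – the next request goes to the member with the fastest Layer 7 response. Why Layer 7 rather than ping or SYN? Because a ping or a TCP SYN only shows that the host and its network stack answer quickly; neither takes into account how quickly the application or database servers behind it respond.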
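One way to picture Fastest is to count Layer 7 requests that have been sent to each member but not yet answered. This Python sketch uses that count as its measure of speed, which is an assumption for illustration; the class and method names are hypothetical. Unlike a ping or SYN check, the count only drops when the application actually answers.

```python
class Fastest:
    """Pick the member with the fewest unanswered Layer 7 requests (sketch)."""

    def __init__(self, members):
        # A slow application or database behind a member delays its
        # responses, so that member's outstanding count grows.
        self.outstanding = {m: 0 for m in members}

    def pick(self):
        # Fewest unanswered requests wins; ties go to the first member in order.
        member = min(self.outstanding, key=self.outstanding.get)
        self.outstanding[member] += 1
        return member

    def response_received(self, member):
        # Called when the member's HTTP response comes back.
        self.outstanding[member] -= 1
```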

Observed – essentially Ratio load balancing, except that the ratios are assigned by the LB rather than by the administrator. Each ratio is based on the member's current connection count: members with fewer connections get a higher ratio (for example, ratio 3 for fewer connections and ratio 2 for more connections). The LB recalculates these ratios every second.

Predictive – the same as Observed, but it assigns the ratios more aggressively (for example, ratio 4 for fewer connections and ratio 1 for more connections).
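The ratio assignment in Observed and Predictive can be sketched with a simplified two-tier rule: members at or below the average connection count get the high ratio, the rest get the low one. The rule itself is an assumption for illustration (BIG-IP's actual formula is not given here); only the example ratios 3/2 and 4/1 come from the text. The resulting ratios would then drive the Ratio method until the next one-second recalculation.

```python
def assign_ratios(connections, high, low):
    # connections maps member -> current connection count.
    # Assumed two-tier rule: at or below the average -> `high`, above -> `low`.
    average = sum(connections.values()) / len(connections)
    return {m: (high if count <= average else low) for m, count in connections.items()}


def observed_ratios(connections):
    # Example ratios from the text: fewer connections -> 3, more -> 2.
    return assign_ratios(connections, high=3, low=2)


def predictive_ratios(connections):
    # Same idea with a more aggressive spread: fewer -> 4, more -> 1.
    return assign_ratios(connections, high=4, low=1)
```

With 10 connections on one member and 40 on another, Observed gives them ratios 3 and 2, while Predictive gives 4 and 1.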

Member vs Node

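You can choose whether the load balancing algorithm uses node statistics or pool member statistics; for example, Least Connections is available as both Least Connections (member) and Least Connections (node). With node statistics, the LB considers only the IP address, so all connections to that server are counted together, whatever the port. With pool member statistics, the LB considers the IP address and the port number, so only connections to that specific service are counted.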
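The difference matters when one server hosts several services. In this Python sketch the connection counts and addresses are hypothetical: the server 10.12.10.1 is busy on port 443, so member statistics and node statistics lead least connections to different choices for the port 80 pool.

```python
from collections import Counter

# Hypothetical snapshot of open connections, keyed by (IP address, port).
open_connections = {
    ("10.12.10.1", 80): 5,
    ("10.12.10.1", 443): 20,  # same server, different service
    ("10.12.10.2", 80): 8,
}

POOL = [("10.12.10.1", 80), ("10.12.10.2", 80)]


def member_stats(conns):
    # Pool member statistics: each IP:port pair is counted on its own.
    return dict(conns)


def node_stats(conns):
    # Node statistics: connections to every port on the same IP are summed.
    totals = Counter()
    for (ip, _port), count in conns.items():
        totals[ip] += count
    return dict(totals)


def least_connections(candidates, stats, key):
    # key() maps a pool member (ip, port) to the key used in `stats`.
    return min(candidates, key=lambda member: stats[key(member)])
```

With member statistics, `least_connections(POOL, member_stats(open_connections), key=lambda m: m)` picks 10.12.10.1:80 (5 < 8). With node statistics, `least_connections(POOL, node_stats(open_connections), key=lambda m: m[0])` picks 10.12.10.2:80, because 10.12.10.1 carries 25 connections in total.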

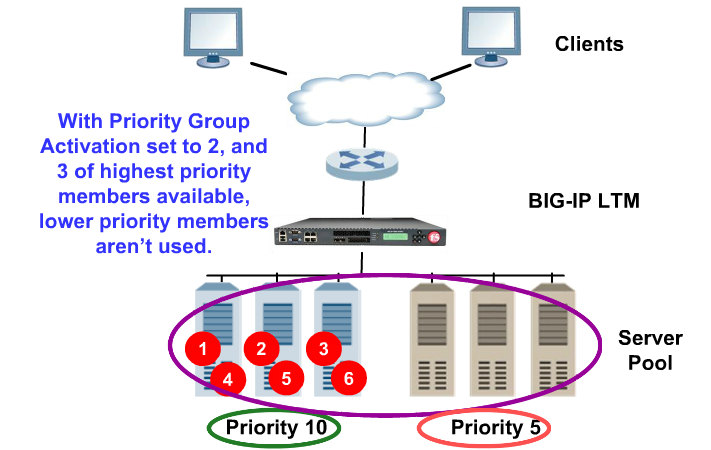

Priority group activation

Priority group activation allows an administrator to define preferred and backup sets of pool members within a pool. With the Priority Group Activation feature, you can specify the minimum number of members that must remain available in each priority group in order for traffic to remain confined to that group. This feature is used in tandem with the Priority Group feature for individual pool members; a higher priority group number means a higher priority. If the number of available members assigned to the highest priority group drops below the number that you specify, Local Traffic Manager distributes traffic to the next highest priority group, and so on. The configuration shown in Figure 4.1 has three priority groups, 3, 2, and 1, with the Priority Group Activation value (shown as min active members) set to 2.

```
pool my_pool {
    lb_mode fastest
    min active members 2
    member 10.12.10.7:80 priority 3
    member 10.12.10.8:80 priority 3
    member 10.12.10.9:80 priority 3
    member 10.12.10.4:80 priority 2
    member 10.12.10.5:80 priority 2
    member 10.12.10.6:80 priority 2
    member 10.12.10.1:80 priority 1
    member 10.12.10.2:80 priority 1
    member 10.12.10.3:80 priority 1
}
```
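The priority group selection can be sketched in Python as a walk over the groups from highest to lowest priority. The tuple layout and function name are hypothetical, and the sketch assumes cumulative activation: the remaining available members of a higher group keep receiving traffic alongside the newly activated group. The configured LB method (Fastest in the example pool) then chooses among the returned members.

```python
from itertools import groupby


def active_members(members, min_active):
    # members: list of (address, priority, available) tuples.
    # Walk the priority groups from highest to lowest, adding each group's
    # available members until at least `min_active` members are collected.
    ordered = sorted(members, key=lambda m: m[1], reverse=True)
    active = []
    for _priority, group in groupby(ordered, key=lambda m: m[1]):
        active.extend(addr for addr, _prio, up in group if up)
        if len(active) >= min_active:
            break
    return active
```

Using the pool above, all traffic stays in group 3 while at least two of its members are up. If 10.12.10.7 and 10.12.10.8 fail, only 10.12.10.9 remains in group 3, so group 2 is activated as well.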

Fallback Host feature (HTTP only)

If all members of the pool are down, the LB can send an HTTP redirect to a fallback host instead of sending no response at all. The fallback host is configured in the HTTP profile.
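The fallback behaviour can be sketched in Python as a check that runs before normal load balancing. This is an illustration only: the function names and the URL `http://sorry.example.com/` are hypothetical, and the 302 status code is an assumption for the redirect.

```python
def fallback_response(fallback_url):
    # Minimal HTTP/1.1 redirect pointing the client at the fallback host.
    return (
        "HTTP/1.1 302 Found\r\n"
        f"Location: {fallback_url}\r\n"
        "Content-Length: 0\r\n"
        "Connection: close\r\n"
        "\r\n"
    ).encode("ascii")


def respond(available_members, fallback_url):
    # While any member is available, the request goes through normal load
    # balancing (represented here by returning None). Only when every member
    # is down does the client get the redirect instead of no response.
    if available_members:
        return None
    return fallback_response(fallback_url)
```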